SpLang

Combining Learning and Reasoning for Spatial Language Understanding

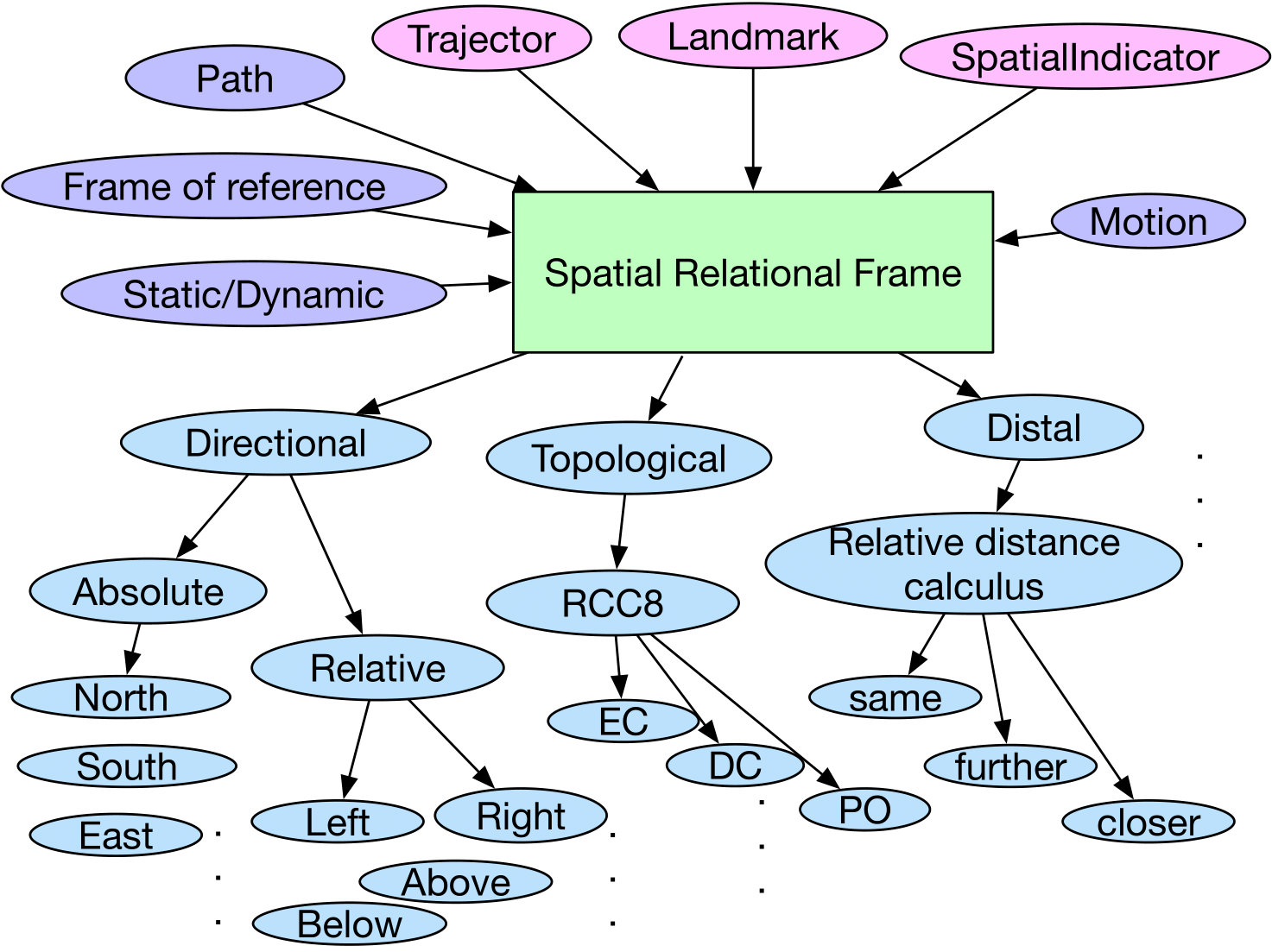

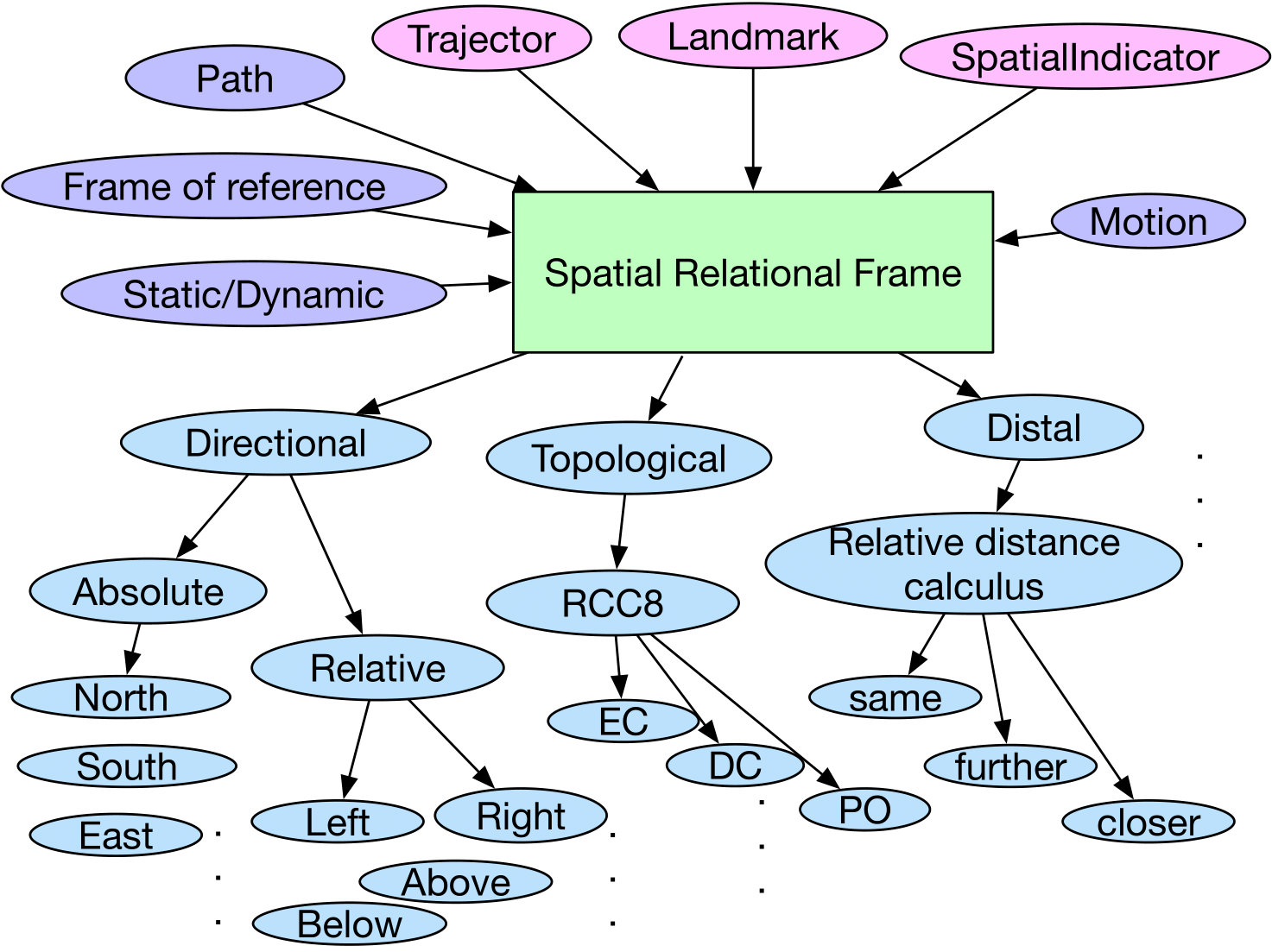

Our goal is to investigate generic, domain-independent spatial meaning representation schemes that can help in tasks involving spatial language understanding. We exploit domain knowledge, including ontologies that convey commonsense knowledge, the axioms of qualitative spatial reasoning, and external visual resources, in an integrated learning and reasoning framework. We develop deep structured and relational learning techniques for this goal and investigate the interaction between learning and spatial reasoning. We evaluate the capabilities of deep structured models in learning qualitative representations and the impact of those representations on textual and visual question answering tasks, focusing on locative questions.

Team Members

- Parisa Kordjamshidi.

- Roshanak Mirzaee, Yue Zhang, Chen Zheng.

Source of funding

- National Science Foundation (NSF).

List of publications

- Towards Navigation by Reasoning over Spatial Configurations. Yue Zhang, Quan Guo and Parisa Kordjamshidi. GitHub. Download.

- Relational Gating for "What If" Reasoning. Chen Zheng and Parisa Kordjamshidi. GitHub. Download.

- SpartQA: A Textual Question Answering Benchmark for Spatial Reasoning. Roshanak Mirzaee, Hossein Rajaby Faghihi, Qiang Ning, and Parisa Kordjamshidi. GitHub. Download.

- SRLGRN: Semantic Role Labeling Graph Reasoning Network. Chen Zheng and Parisa Kordjamshidi. GitHub. Download.

- Cross-Modality Relevance for Reasoning on Language and Vision. Chen Zheng, Quan Guo and Parisa Kordjamshidi. Download.

- From Spatial Relations to Spatial Configurations. Soham Dan, Parisa Kordjamshidi, Julia Bonn, Archna Bhatia, Martha Palmer and Dan Roth. Download.